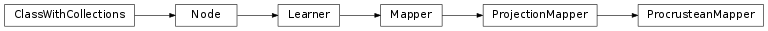

mvpa2.mappers.procrustean.ProcrusteanMapper


class mvpa2.mappers.procrustean.ProcrusteanMapper(space='targets', **kwargs)

Mapper to project from one space to another using a Procrustean transformation (shift + scaling + rotation).
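For reference, the standard Procrustes problem behind "shift + scaling + rotation" can be written as follows. This is the textbook formulation, assuming samples in rows of the source matrix X and target matrix Y with column means \mu_X and \mu_Y; it is not taken from the PyMVPA source and may differ in details (e.g. how reflections or oblique fits are handled). With scaling disabled, s is fixed to 1.

```latex
\begin{aligned}
X_0 &= X - \mathbf{1}\mu_X^{\top}, \qquad Y_0 = Y - \mathbf{1}\mu_Y^{\top} \\
(\hat{s}, \hat{R}) &= \arg\min_{s > 0,\; R^{\top}R = I} \lVert s\,X_0 R - Y_0 \rVert_F^2 \\
X_0^{\top} Y_0 &= U \Sigma V^{\top} \;\Rightarrow\; \hat{R} = U V^{\top}, \qquad
\hat{s} = \frac{\operatorname{tr}\Sigma}{\lVert X_0 \rVert_F^2} \\
\hat{Y} &= \hat{s}\,(X - \mathbf{1}\mu_X^{\top})\,\hat{R} + \mathbf{1}\mu_Y^{\top}
\end{aligned}
```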

Training this mapper requires data for both the source and the target space to be present in the training dataset. The source space data is taken from the training dataset's samples, while the target space data is taken from the sample attribute named by the mapper's space parameter (default: 'targets'). See: http://en.wikipedia.org/wiki/Procrustes_transformation
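A minimal NumPy sketch of the same idea: fit a shift, a global scale, and an orthogonal rotation that map source samples onto target samples, then apply the fit. Here source and target are plain arrays (rows are samples); in the mapper, source would be the dataset's samples and target the sample attribute named by space. The functions fit_procrustes and apply_procrustes are hypothetical illustrations, not PyMVPA's API or implementation.

```python
import numpy as np

def fit_procrustes(source, target, scaling=True):
    """Hypothetical helper: fit target ~ s * (source - mu_s) @ R + mu_t."""
    mu_s = source.mean(axis=0)
    mu_t = target.mean(axis=0)
    X0 = source - mu_s  # demeaned source space
    Y0 = target - mu_t  # demeaned target space
    # Orthogonal Procrustes: R = U @ Vt from the SVD of the cross-product matrix
    U, sv, Vt = np.linalg.svd(X0.T @ Y0, full_matrices=False)
    R = U @ Vt
    # Optimal global scale for the fixed R (1.0 keeps a rigid-body fit)
    s = sv.sum() / (X0 ** 2).sum() if scaling else 1.0
    return mu_s, mu_t, R, s

def apply_procrustes(params, data):
    """Hypothetical helper: project new source-space samples into target space."""
    mu_s, mu_t, R, s = params
    return s * (data - mu_s) @ R + mu_t

# Build a target space from a known orthogonal matrix, scale, and shift
rng = np.random.default_rng(0)
source = rng.normal(size=(20, 3))
Q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
target = 2.5 * source @ Q + np.array([1.0, -2.0, 0.5])

params = fit_procrustes(source, target)
print(np.allclose(apply_procrustes(params, source), target))
```

Because the target was generated by an exact shift, scale, and orthogonal transform, the fit recovers all three (up to floating-point error).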

Notes

Available conditional attributes:

calling_time+: None
raw_results: None
trained_dataset: None
trained_nsamples+: None
trained_targets+: None
training_time+: None

(Conditional attributes enabled by default are suffixed with +)

Methods

Initialize instance of ProcrusteanMapper

Parameters:

scaling : bool, optional

Estimate a global scaling factor for the transformation (which is then no longer a rigid-body transformation). Constraints: value must be convertible to type bool. [Default: True]

reflection : bool, optional

Allow the data to be reflected (so the transformation might not be a pure rotation). Effective only for non-oblique transformations. Constraints: value must be convertible to type bool. [Default: True]

reduction : bool, optional

If True, mapping into a lower-dimensional space is allowed. The forward transformation might then be suboptimal, and the reverse transformation might not recover all of the original variance. Constraints: value must be convertible to type bool. [Default: True]

oblique : bool, optional

Whether to allow a non-orthogonal transformation. This might heavily overfit the data if there are fewer samples than dimensions; use oblique_rcond to regularize it. Constraints: value must be convertible to type bool. [Default: False]

oblique_rcond : float, optional

Cutoff for 'small' singular values to regularize the inverse. See lstsq for more information. Constraints: value must be convertible to type 'float'. [Default: -1]

svd : {numpy, scipy, dgesvd}, optional

Implementation of SVD to use. dgesvd requires ctypes to be available. Constraints: value must be one of (‘numpy’, ‘scipy’, ‘dgesvd’). [Default: ‘numpy’]

enable_ca : None or list of str

Names of the conditional attributes which should be enabled in addition to the default ones

disable_ca : None or list of str

Names of the conditional attributes which should be disabled

demean : bool

Whether the data should be demeaned while computing projections, with the mean added back in reverse().

auto_train : bool

Flag whether the learner will automatically train itself on the input dataset when called untrained.

force_train : bool

Flag whether the learner will enforce training on the input dataset upon every call.

space : str, optional

Name of the ‘processing space’. The actual meaning of this argument heavily depends on the sub-class implementation. In general, this is a trigger that tells the node to compute and store information about the input data that is “interesting” in the context of the corresponding processing in the output dataset.

pass_attr : str, list of str|tuple, optional

Additional attributes to pass on to an output dataset. Attributes can be taken from all three attribute collections of an input dataset (sa, fa, a; see Dataset.get_attr()), or from the collection of conditional attributes (ca) of a node instance. Corresponding collection name prefixes should be used to identify attributes, e.g. 'ca.null_prob' for the conditional attribute 'null_prob', or 'fa.stats' for the feature attribute 'stats'. In addition to a plain attribute identifier it is possible to use a tuple to trigger more complex operations. The first tuple element is the attribute identifier, as described before. The second element is the name of the target attribute collection (sa, fa, or a). The third element is the axis number of a multidimensional array that shall be swapped with the current first axis. The fourth element is a new name that shall be used for the attribute in the output dataset. Example: ('ca.null_prob', 'fa', 1, 'pvalues') will take the conditional attribute 'null_prob' and store it as a feature attribute 'pvalues', while swapping the first and second axes. Simplified instructions can be given by leaving out consecutive tuple elements starting from the end.

postproc : Node instance, optional

Node to perform post-processing of results. This node is applied in __call__() to perform a final processing step on the resulting dataset. If None, nothing is done.

descr : str

Description of the instance
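The reflection, oblique, and oblique_rcond parameters above can be illustrated with a small NumPy sketch on demeaned data. When reflections are not allowed, a common approach (the Kabsch correction) flips the sign of the singular vector with the smallest singular value so the result has determinant +1; an oblique fit is an unconstrained least-squares linear map whose rcond plays the role of oblique_rcond. The helper names orthogonal_map and oblique_map are hypothetical, and whether PyMVPA handles these cases exactly this way is an assumption.

```python
import numpy as np

def orthogonal_map(X0, Y0, reflection=True):
    """Hypothetical helper: orthogonal Procrustes map from X0 to Y0 (both demeaned)."""
    U, _, Vt = np.linalg.svd(X0.T @ Y0, full_matrices=False)
    R = U @ Vt
    # Kabsch-style correction, only meaningful for square (same-dimension) maps
    if not reflection and R.shape[0] == R.shape[1] and np.linalg.det(R) < 0:
        U[:, -1] *= -1  # flip the axis with the smallest singular value
        R = U @ Vt
    return R

def oblique_map(X0, Y0, rcond=-1):
    """Hypothetical helper: non-orthogonal linear map via least squares."""
    M, *_ = np.linalg.lstsq(X0, Y0, rcond=rcond)
    return M

rng = np.random.default_rng(1)
X0 = rng.normal(size=(30, 3))
X0 -= X0.mean(axis=0)

F = np.diag([-1.0, 1.0, 1.0])                   # a pure reflection
R_free = orthogonal_map(X0, X0 @ F)              # reflection allowed: recovers F
R_rot = orthogonal_map(X0, X0 @ F, reflection=False)  # best proper rotation instead

A = rng.normal(size=(3, 3))                      # arbitrary non-orthogonal map
M = oblique_map(X0, X0 @ A)                      # least squares recovers A
print(np.linalg.det(R_free), np.linalg.det(R_rot))
```

With more samples than dimensions, as here, the oblique fit is well determined; with fewer samples than dimensions it can overfit, which is what the oblique_rcond cutoff is meant to counter.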

Methods